CS 488: Lecture 2 – Triangles and Transforms

Dear students:

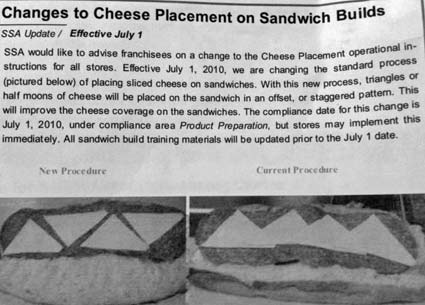

Last time we discussed plotting individual points to the framebuffer and the role of vertex and fragment shaders. Today we explore how to stitch those points together into filled shapes. I say filled shapes, but in reality, we will only stitch them together in triangles—never quadrilaterals, pentagons, hexagons, or anything else. We limit ourselves to triangles because any shape that’s more complex can be reduced down to triangles. This blurb about cheese placement on Subway sandwiches illustrates this fact:

Triangles have been studied for centuries, and their mathematical properties are well-known and relatively simple compared to other polygons. A triangle is necessarily confined to a plane. If a shape has four points, there’s a possibility that they aren’t coplanar. Our graphics cards have circuitry optimized for the express purpose of computing values across a triangle. So, triangles it is.
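To make the claim about reducing shapes to triangles concrete, here is a minimal sketch of one common approach, a triangle fan. The function name `fanTriangulate` is made up for illustration, and the sketch assumes the polygon is convex with its vertices listed in order. Concave shapes need a more careful algorithm.

```javascript
// Split a convex polygon into triangles that all share vertex 0.
// Assumption: the polygon is convex and its vertices are listed in order.
// Each triangle is a triplet of indices into the polygon's vertex list.
function fanTriangulate(vertexCount) {
  const triangles = [];
  for (let i = 1; i < vertexCount - 1; i += 1) {
    triangles.push([0, i, i + 1]);
  }
  return triangles;
}

console.log(fanTriangulate(4)); // a quadrilateral becomes two triangles
console.log(fanTriangulate(6)); // a hexagon becomes four triangles
```

A polygon with n vertices yields n - 2 triangles this way.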

Individual Triangles

Drawing triangles starts the same way as drawing points: we load up the graphics card with vertex attributes. The only part that differs is the draw call. Instead of gl.POINTS, we request gl.TRIANGLES. The graphics driver interprets the vertex buffer object as being a collection of vertex triplets rather than individual vertices.
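The grouping into triplets can be mimicked on the CPU. The following is only a sketch of the idea, not what the driver actually runs. The helper `groupIntoTriangles` is hypothetical, and it assumes three floats per vertex, as in our position attribute.

```javascript
// Group a flat array of xyz coordinates into triangles, three vertices each.
// Assumption: every vertex has exactly 3 components.
// Leftover vertices that don't form a complete triangle are skipped.
function groupIntoTriangles(positions) {
  const floatsPerVertex = 3;
  const floatsPerTriangle = 3 * floatsPerVertex;
  const triangles = [];
  for (let i = 0; i + floatsPerTriangle <= positions.length; i += floatsPerTriangle) {
    const triangle = [];
    for (let v = 0; v < 3; v += 1) {
      const start = i + v * floatsPerVertex;
      triangle.push(positions.slice(start, start + floatsPerVertex));
    }
    triangles.push(triangle);
  }
  return triangles;
}

const positions = [
  -0.5, -0.5, 0,
   0.5, -0.5, 0,
  -0.5,  0.5, 0,
];
console.log(groupIntoTriangles(positions));
```

Nine floats make three vertices, and three vertices make one triangle. Eighteen floats would make two triangles, and so on.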

To render a single triangle, we alter the functions initialize and render as follows:

```javascript
function initialize() {
  const positions = [
    -0.5, -0.5, 0,
     0.5, -0.5, 0,
    -0.5,  0.5, 0,
  ];
  const attributes = new VertexAttributes();
  attributes.addAttribute('position', 3, 3, positions);
  // ...
}

function render() {
  // ...
  vertexArray.drawSequence(gl.TRIANGLES);
  // ...
}
```

What happens under the hood of the GPU is that each vertex’s clip space position is converted into pixel coordinates. Lines of pixels between the triangle’s edges are filled in by calling the fragment shader on each pixel. This process is called rasterization.
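The first step of that conversion can be sketched in a few lines. This is a simplification under stated assumptions: the viewport covers the whole canvas with its origin in the bottom-left corner, and the position's w component is 1, so clip space and normalized device coordinates coincide. The function name `ndcToPixel` is made up for illustration.

```javascript
// Map x and y from the [-1, 1] range to pixel coordinates.
// Assumptions: the viewport spans the full width x height canvas,
// its origin is the bottom-left corner, and w = 1.
function ndcToPixel(x, y, width, height) {
  return {
    x: (x * 0.5 + 0.5) * width,
    y: (y * 0.5 + 0.5) * height,
  };
}

// The three vertices of our triangle on a 400x300 canvas.
console.log(ndcToPixel(-0.5, -0.5, 400, 300));
console.log(ndcToPixel(0.5, -0.5, 400, 300));
console.log(ndcToPixel(-0.5, 0.5, 400, 300));
```

Once the three vertices have pixel coordinates, the GPU decides which pixels fall inside the triangle and runs the fragment shader on each.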

Interpolation

We’ve seen that we can assign attributes at the vertices, and these get fed into the vertex shader as in variables. So far we’ve only assigned the spatial position. Suppose we also want to assign a color. We start by adding a new attribute to our vertex buffer object:

```javascript
function initialize() {
  const positions = [
    -0.5, -0.5, 0,
     0.5, -0.5, 0,
    -0.5,  0.5, 0,
  ];
  const colors = [
    1, 0, 0,
    0, 1, 0,
    0, 0, 1,
  ];
  const attributes = new VertexAttributes();
  attributes.addAttribute('position', 3, 3, positions);
  attributes.addAttribute('color', 3, 3, colors);
  // ...
}
```

When the fragment shader runs on the pixels between the vertices, what color should be assigned? The pixels right under the vertices should probably be red, green, and blue, but what about the ones in the middle?

The GPU will interpolate vertex attributes as it rasterizes the triangle. When we interpolate, we fill in the gap between known quantities by blending between them. To add interpolation to our rendering, we head to our shaders. In the vertex shader, we add an in variable for our new attribute. We also add an out variable to hold the known quantities that will get blended and sent into the fragment shader:

```glsl
in vec3 position;
in vec3 color;
out vec3 fcolor;

void main() {
  gl_Position = vec4(position, 1.0);
  fcolor = color;
}
```

My scheme for marking a variable as something that gets blended is to prefix it with f—for “fragment.” I’m not necessarily happy with this practice, but I haven’t come up with anything better.

The out variables of the vertex shader correspond to the in variables of the fragment shader:

```glsl
in vec3 fcolor;
out vec4 fragmentColor;

void main() {
  fragmentColor = vec4(fcolor, 1.0);
}
```

The value dropped into fcolor is automatically a mathematical blend of the colors of the three vertices. We will use this automatic interpolation to achieve lighting, texturing, and other effects.
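To get a feel for what that blend looks like, here is a CPU-side sketch that uses barycentric weights. It is an approximation of what the hardware does, not a description of it. It assumes a flat 2D triangle where every vertex has w = 1, so no perspective correction is needed. The helper names `edge`, `barycentricWeights`, and `blend` are invented for this example.

```javascript
// Twice the signed area of the triangle a, b, p.
function edge(a, b, p) {
  return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]);
}

// How much each vertex contributes at point p. The weights sum to 1.
function barycentricWeights(a, b, c, p) {
  const area = edge(a, b, c);
  return [edge(b, c, p) / area, edge(c, a, p) / area, edge(a, b, p) / area];
}

// Weighted average of the three vertex colors, channel by channel.
function blend(weights, colors) {
  return [0, 1, 2].map(channel =>
    weights[0] * colors[0][channel] +
    weights[1] * colors[1][channel] +
    weights[2] * colors[2][channel]
  );
}

const a = [-0.5, -0.5];
const b = [0.5, -0.5];
const c = [-0.5, 0.5];
const colors = [[1, 0, 0], [0, 1, 0], [0, 0, 1]];

// Halfway along the bottom edge, red and green contribute equally.
console.log(blend(barycentricWeights(a, b, c, [0, -0.5]), colors));
```

A pixel sitting right on a vertex gets that vertex's color, and pixels in the interior get a mix that depends on how close they are to each vertex.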

Transforms

We have a way of drawing static shapes made of triangles. To make them less static, we transform them. The most common ways to move our shapes are through scaling, translation, and rotation. We apply mathematical operations to the vertex positions to achieve each of these movements.

The transformation that we apply to our models is usually dynamic. Rather than update the positions in the vertex buffer object, we apply the mathematical operations on the fly in the vertex shader.

Scale

To scale a shape, we multiply its coordinates by the desired scale factors. If the scale factors are the same on every dimension, we say that the scaling is uniform. Uniformity is not required.

In our vertex shader, we accept the scale factors as a uniform, which is an unfortunate word collision. In GLSL, “uniform” means the same for every vertex. This is in contrast to vertex attributes. We then use the factors to compute our position:

```glsl
uniform vec3 factors;
in vec3 position;

void main() {
  gl_Position = vec4(
    factors.x * position.x,
    factors.y * position.y,
    factors.z * position.z,
    1.0
  );
}
```

There are better ways to write this; we’ll see them later. But we haven’t discussed vectors and matrices yet.

On the CPU side, we need to provide values for factors, which we’ll do in the render method:

```javascript
shaderProgram.bind();
shaderProgram.setUniform3f('factors', 1.5, 0.5, 1);
```
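To predict where a vertex will land before running the shader, we can do the same arithmetic on the CPU. This is just a sanity-check sketch. The function `scale` is a hypothetical helper, not part of our rendering code.

```javascript
// Multiply each coordinate by its matching scale factor,
// mirroring what the vertex shader does per vertex.
function scale(position, factors) {
  return position.map((coordinate, i) => coordinate * factors[i]);
}

const factors = [1.5, 0.5, 1];
console.log(scale([-0.5, -0.5, 0], factors));
console.log(scale([0.5, -0.5, 0], factors));
console.log(scale([-0.5, 0.5, 0], factors));
```

With these factors the triangle gets wider and shorter. Because the factors differ between x and y, this scaling is non-uniform.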

Translate

Translating is shifting an object from one location to another. Instead of multiplying by factors, we add offsets. Otherwise, the code is very similar to scaling:

```glsl
uniform vec3 offsets;
in vec3 position;

void main() {
  gl_Position = vec4(
    position.x + offsets.x,
    position.y + offsets.y,
    position.z + offsets.z,
    1.0
  );
}
```

On the CPU side, we supply values for offsets:

```javascript
shaderProgram.bind();
shaderProgram.setUniform3f('offsets', 0.5, -0.2, 0);
```
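As with scaling, the shader's arithmetic is easy to check by hand on the CPU. The helper `translate` below is hypothetical and exists only for this illustration.

```javascript
// Add each offset to its matching coordinate,
// mirroring what the vertex shader does per vertex.
function translate(position, offsets) {
  return position.map((coordinate, i) => coordinate + offsets[i]);
}

const offsets = [0.5, -0.2, 0];
console.log(translate([-0.5, -0.5, 0], offsets));
console.log(translate([0.5, -0.5, 0], offsets));
console.log(translate([-0.5, 0.5, 0], offsets));
```

Every vertex shifts by the same amount, so the triangle keeps its size and shape. Note that the second vertex ends up at x = 1, right on the edge of the visible region.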

Rotate

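Rotation is more complex than scaling and translating. We will limit ourselves to 2D rotation in the Z = 0 plane for the time being. To figure out the mathematical operations of rotation, we first recognize that a point $p$ can be expressed either in Cartesian coordinates or in polar coordinates as a radius and angle:

$$p = (x, y)_{\text{Cartesian}} = (r, a)_{\text{polar}}$$

To convert from polar coordinates to Cartesian, we apply our trigonometric knowledge:

$$\begin{aligned} x &= r \cos a \\ y &= r \sin a \end{aligned}$$

Now we frame the problem of rotation. We have an existing point $p$ and we want to rotate it $b$ radians to compute $p'$. We can express $p'$ in polar coordinates, with the angle being the sum of the original angle $a$ and the additional angle $b$:

$$\begin{aligned} x' &= r \cos (a + b) \\ y' &= r \sin (a + b) \end{aligned}$$

See those sums in our sine and cosine calculations? Well, we can rewrite those thanks to some handy trigonometric identities that you may not remember. These are the sum and difference identities:

$$\begin{aligned} \cos (a \pm b) &= \cos a \cos b \mp \sin a \sin b \\ \sin (a \pm b) &= \sin a \cos b \pm \cos a \sin b \end{aligned}$$

We rewrite the definitions of $x'$ and $y'$ using these identities:

$$\begin{aligned} x' &= r (\cos a \cos b - \sin a \sin b) \\ y' &= r (\sin a \cos b + \cos a \sin b) \end{aligned}$$

Now we can simplify a bit. We distribute $r$:

$$\begin{aligned} x' &= r \cos a \cos b - r \sin a \sin b \\ y' &= r \sin a \cos b + r \cos a \sin b \end{aligned}$$

See that $r \cos a$? That’s what we defined $x$ as earlier. Likewise, $r \sin a$ is $y$. Let’s substitute these in:

$$\begin{aligned} x' &= x \cos b - y \sin b \\ y' &= x \sin b + y \cos b \end{aligned}$$

And that’s our formula for rotating. Let’s implement it in our vertex shader: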

```glsl
uniform float radians;
in vec3 position;

void main() {
  gl_Position = vec4(
    cos(radians) * position.x - sin(radians) * position.y,
    sin(radians) * position.x + cos(radians) * position.y,
    position.z,
    1.0
  );
}
```

On the CPU side, we supply a value for radians:

```javascript
shaderProgram.bind();
shaderProgram.setUniform1f('radians', 0.1);
```
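A quick way to convince ourselves that the rotation formula behaves is to try it on the CPU with an angle whose answer we already know. The sketch below uses a hypothetical helper named `rotate` that applies the same arithmetic as the shader. Rotating the point (1, 0) by a quarter turn should land on (0, 1), give or take floating-point error.

```javascript
// Rotate a position about the z-axis by the given angle in radians,
// using the same arithmetic as the vertex shader. z is left alone.
function rotate(position, radians) {
  const [x, y, z] = position;
  const c = Math.cos(radians);
  const s = Math.sin(radians);
  return [
    c * x - s * y,
    s * x + c * y,
    z,
  ];
}

console.log(rotate([1, 0, 0], Math.PI / 2)); // approximately (0, 1, 0)
console.log(rotate([-0.5, -0.5, 0], 0.1));
```

Positive angles turn the point counterclockwise. The distance from the origin doesn't change, which is what we expect from a rotation.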

Horizon

We’ve seen how to manually transform our shapes. Our approach suffers from a few problems. For one, it’s going to get really messy if we try to apply more than one transformation. For another, we’re not exploiting the fast hardware of the GPU. We will fix both of these problems by recasting this math as vector and matrix operations.
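Here is a small taste of the messiness. The sketch below chains a scale and a translation on the CPU, using hypothetical helpers `scale` and `translate` that mirror our shader arithmetic. The order in which we apply them changes the answer.

```javascript
// Multiply each coordinate by its matching scale factor.
function scale(position, factors) {
  return position.map((coordinate, i) => coordinate * factors[i]);
}

// Add each offset to its matching coordinate.
function translate(position, offsets) {
  return position.map((coordinate, i) => coordinate + offsets[i]);
}

const p = [0.5, 0.5, 0];
const factors = [2, 2, 1];
const offsets = [0.25, 0, 0];

// Scale first, then translate: (1.25, 1, 0)
console.log(translate(scale(p, factors), offsets));

// Translate first, then scale: (1.5, 1, 0)
console.log(scale(translate(p, offsets), factors));
```

In the second ordering, the offset itself gets scaled. Every combination of transformations would need its own hand-written shader arithmetic, which is what makes this approach hard to manage.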

TODO

Here’s your very first TODO list:

- Read Fundamentals and How It Works from webgl2fundamentals.org.

- Start the Rastercaster homework.

- Complete the first quiz before Friday.

See you next time.

P.S. It’s time for a haiku!

I made a black hole

Physics is hard, but not math

Just scale by zero